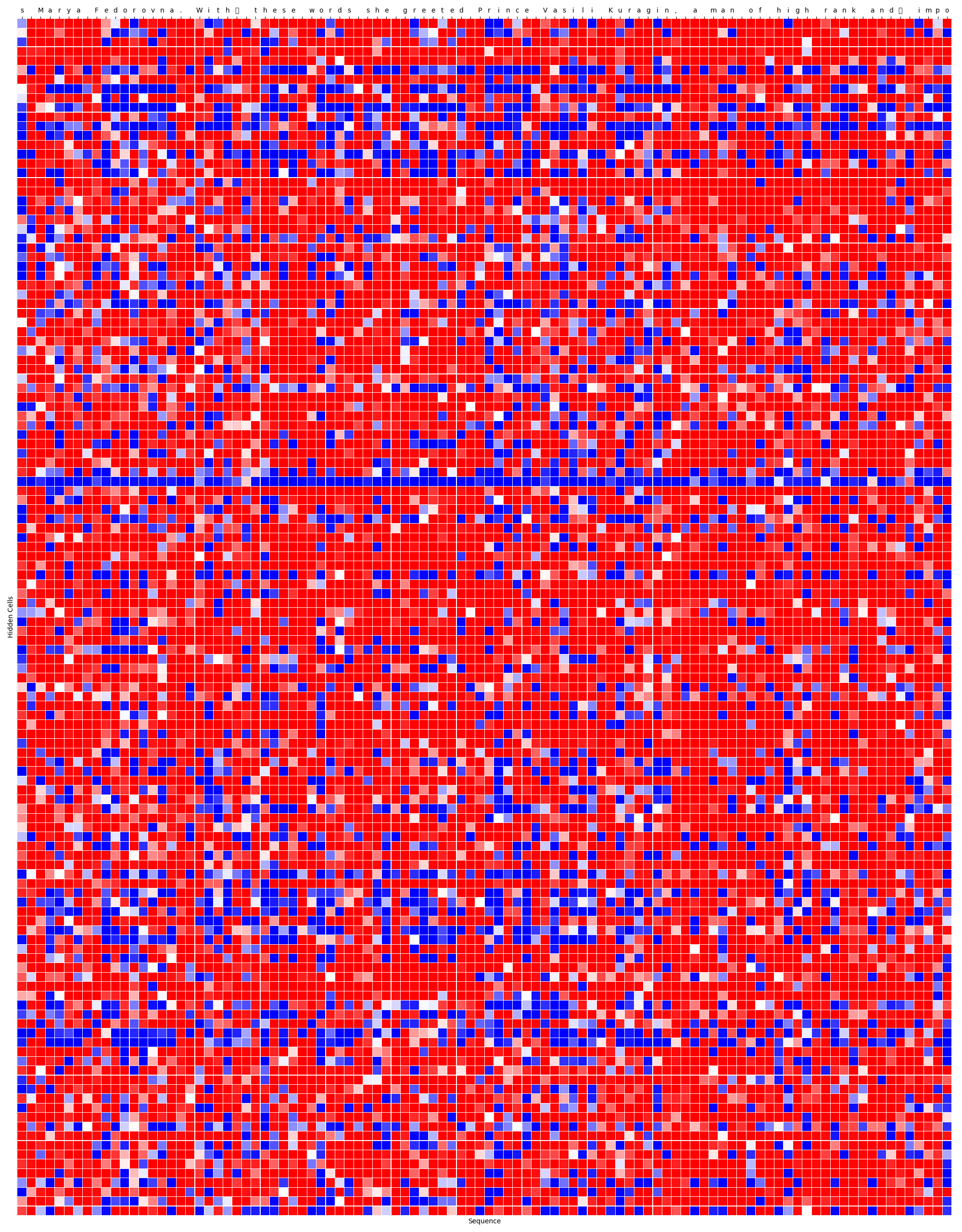

VisualizeInternalGates and the dataset in VisualizeWarAndPeaceDataset. You can just build your model and dataset from these two classes.)data/war_and_peace_visualize.txt, including update gate, reset gate and internal cell candidates.VisualizeInternalGates and VisualizeGRUCell.

More specifically, you should look for general patterns in the figure. For example, in the figure below for update gate, each row represents one hidden cell and each column represents characters in the sequence (after each character being processed). You should look at the image zoomed-out and look for thick columns that are generally more different than the surroundings.