CS 1678/2078: Homework 3

Due: 3/21/2024, 11:59pm

This assignment is worth 50 points.

You will write your code, along with any requested responses or descriptions of your results, in a Colab Jupyter notebook. Starter code is provided in two notebooks: one for Parts A and B, and one for Parts C and D.

Save a copy of each notebook to your own Google Drive so that you can edit it.

Please spend some time (2-3 hours) reading our starter code before you start working. Please also leave a few days for training (for example, one epoch in Part A takes about 30 minutes). Excluding the time spent training the models, we expect this assignment to take 7-10 hours.

Data downloads are automatically handled in the starter files.

Part A: Implement AlexNet - Winner of ILSVRC 2012 (10 points)

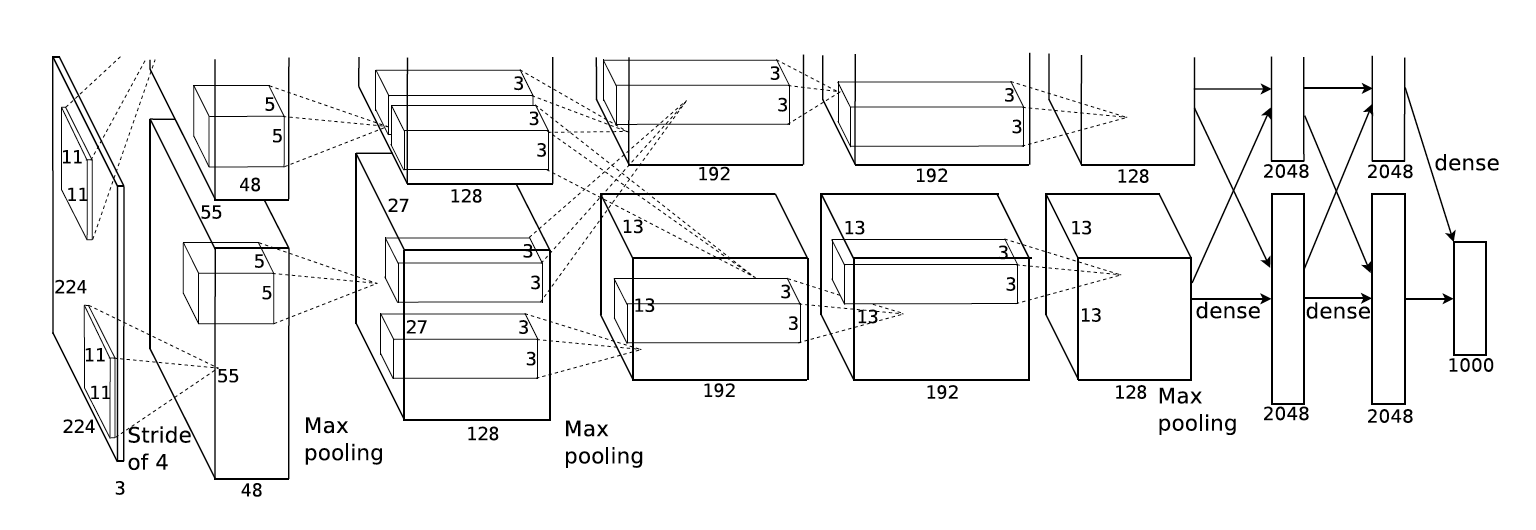

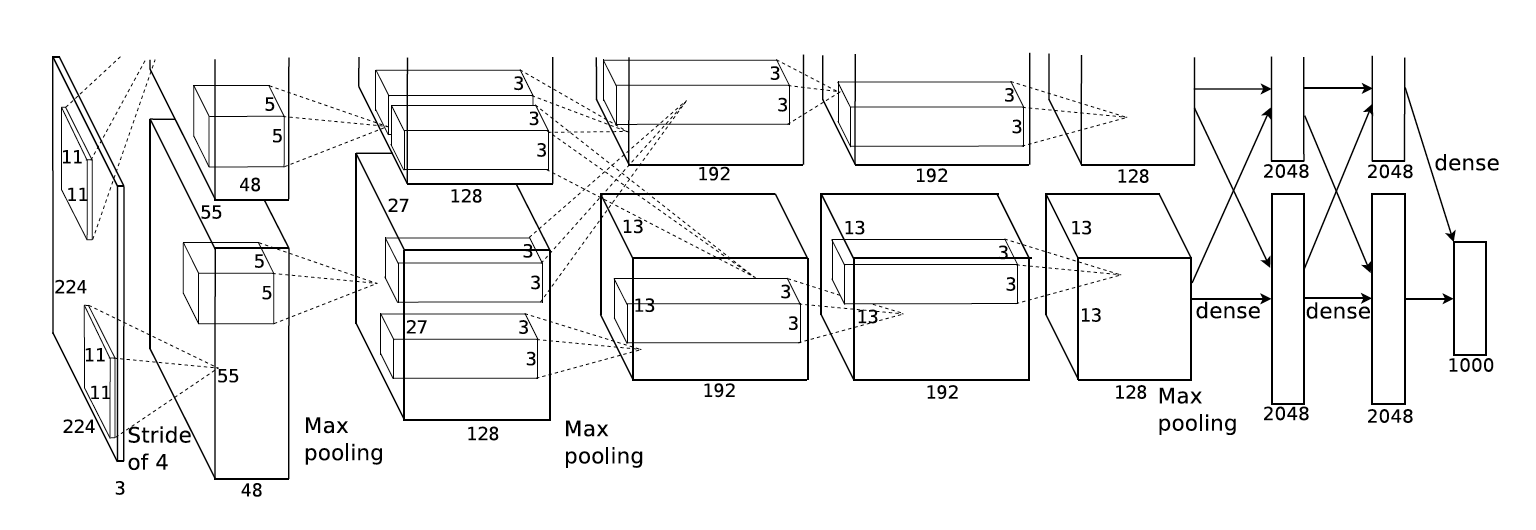

AlexNet is a milestone in the resurgence of deep learning, and it astonished the computer vision community by winning the ILSVRC 2012 by a large margin.

In this assignment, you need to implement the original AlexNet using PyTorch.

The model architecture is shown in the figure above, which is taken from the original AlexNet paper.

More specifically, your AlexNet should have the following architecture:

================================================================================================================

Layer (type)    Kernel    Padding  Stride  Dilation  Output Shape          Param #

----------------------------------------------------------------------------------------------------------------

Conv2d-1        11 x 11   -        4       -         [-1, 96, 55, 55]      34,944

ReLU-2          -         -        -       -         [-1, 96, 55, 55]      0

MaxPool2d-3     3         -        2       -         [-1, 96, 27, 27]      0

Conv2d-4        5 x 5     2        -       -         [-1, 256, 27, 27]     614,656

ReLU-5          -         -        -       -         [-1, 256, 27, 27]     0

MaxPool2d-6     3         -        2       -         [-1, 256, 13, 13]     0

Conv2d-7        3 x 3     1        -       -         [-1, 384, 13, 13]     885,120

ReLU-8          -         -        -       -         [-1, 384, 13, 13]     0

Conv2d-9        3 x 3     1        -       -         [-1, 384, 13, 13]     1,327,488

ReLU-10         -         -        -       -         [-1, 384, 13, 13]     0

Conv2d-11       3 x 3     1        -       -         [-1, 256, 13, 13]     884,992

ReLU-12         -         -        -       -         [-1, 256, 13, 13]     0

MaxPool2d-13    3         -        2       -         [-1, 256, 6, 6]       0

Flatten-14      -         -        -       -         [-1, 9216]            0

Dropout-15      -         -        -       -         [-1, 9216]            0

Linear-16       -         -        -       -         [-1, 4096]            37,752,832

ReLU-17         -         -        -       -         [-1, 4096]            0

Dropout-18      -         -        -       -         [-1, 4096]            0

Linear-19       -         -        -       -         [-1, 4096]            16,781,312

ReLU-20         -         -        -       -         [-1, 4096]            0

Linear-21       -         -        -       -         [-1, 4]               16,388

================================================================================================================

Note: The `-1` in Output shape represents `batch_size`, which is flexible during program execution.
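The Output Shape column can be checked by hand with the standard size formula for convolution and pooling layers, out = floor((in + 2 * padding - dilation * (kernel - 1) - 1) / stride) + 1. The sketch below is only an arithmetic check, not the model itself. It assumes a 227 x 227 input, which this handout does not state but which is consistent with the 55 x 55 output of the first layer, and it assumes stride 1 and padding 0 wherever the table lists no value. The names `out_size`, `trace_shapes` and `ALEXNET_LAYERS` are made up for this illustration.

```python
def out_size(size, kernel, stride=1, padding=0, dilation=1):
    """Spatial output size of a convolution or pooling layer (floor rounding)."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


# (layer name, kernel, stride, padding) for the layers that change spatial size.
# Values are read from the Part A table; missing entries are assumed defaults.
ALEXNET_LAYERS = [
    ("Conv2d-1", 11, 4, 0),
    ("MaxPool2d-3", 3, 2, 0),
    ("Conv2d-4", 5, 1, 2),
    ("MaxPool2d-6", 3, 2, 0),
    ("Conv2d-7", 3, 1, 1),
    ("Conv2d-9", 3, 1, 1),
    ("Conv2d-11", 3, 1, 1),
    ("MaxPool2d-13", 3, 2, 0),
]


def trace_shapes(input_size, layers):
    """Return [(layer name, output height/width)] for a square input."""
    sizes = []
    size = input_size
    for name, kernel, stride, padding in layers:
        size = out_size(size, kernel, stride, padding)
        sizes.append((name, size))
    return sizes


for name, size in trace_shapes(227, ALEXNET_LAYERS):  # 227 is an assumption
    print(f"{name:<14} -> {size} x {size}")
```

The final 6 x 6 map with 256 channels flattens to 256 * 6 * 6 = 9216 features, which matches the Flatten-14 row.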

Some tips:

Instructions:

- Complete the implementation of the AlexNet class, and train the model for the domain prediction task.

Flags for sample usage (e.g. learning rate) are provided in the starter file. Run training for 3-5 epochs; you should be able to achieve 80% accuracy. You may need to tune the hyperparameters to achieve better performance.

- Report the model architecture (i.e. call print(model) and copy/paste the output in your notebook), as well as the accuracy on the validation set.
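When comparing your implementation against the table in Part A, the Param # column can be reproduced by hand: each layer's count is its number of weights plus its number of biases. This is a minimal sketch, assuming 3 input channels (RGB) and a bias term on every layer. The helpers `conv2d_params` and `linear_params` are illustrative names and are not part of the starter code.

```python
def conv2d_params(in_channels, out_channels, kernel_size, bias=True):
    """Weights (out * in * k * k) plus one bias per output channel."""
    weights = out_channels * in_channels * kernel_size * kernel_size
    return weights + (out_channels if bias else 0)


def linear_params(in_features, out_features, bias=True):
    """Weights (in * out) plus one bias per output feature."""
    return in_features * out_features + (out_features if bias else 0)


# name -> (computed count, count listed in the Part A table)
# The 3 input channels of Conv2d-1 are an assumption (RGB images).
checks = {
    "Conv2d-1": (conv2d_params(3, 96, 11), 34_944),
    "Conv2d-4": (conv2d_params(96, 256, 5), 614_656),
    "Conv2d-7": (conv2d_params(256, 384, 3), 885_120),
    "Conv2d-9": (conv2d_params(384, 384, 3), 1_327_488),
    "Conv2d-11": (conv2d_params(384, 256, 3), 884_992),
    "Linear-16": (linear_params(9216, 4096), 37_752_832),
    "Linear-19": (linear_params(4096, 4096), 16_781_312),
    "Linear-21": (linear_params(4096, 4), 16_388),
}

for name, (computed, listed) in checks.items():
    status = "ok" if computed == listed else "MISMATCH"
    print(f"{name:<10} computed={computed:>12,} listed={listed:>12,} {status}")
```

ReLU, pooling, Flatten and Dropout layers have no learnable parameters, which is why their rows list 0.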

Part B: Enhancing AlexNet (10 points)

In this part, you will modify the AlexNet from Part A and train new models with the changes described below. Each modification should be easy to make (e.g. changing just a few lines). For both subparts, use the best hyperparameters you found in Part A to train the new model, and report the architecture and accuracy in a text snippet in your notebook and in your report.

Instructions:

- Larger kernel size:

The original AlexNet has 5 convolutional layers, as defined in the table in Part A.

We observe that the 1st, 2nd and 5th convolutional layers are each followed by a MaxPool2d layer that downsamples the feature maps.

An alternative strategy is to use a larger convolutional kernel (and thus a larger receptive field) with a larger stride, which produces a smaller output directly.

Please copy your AlexNet to a new class named AlexNetLargeKernel, and implement the model following the architectures given below.

class AlexNetLargeKernel

================================================================================================================

Layer (type)    Kernel    Padding  Stride  Dilation  Output Shape          Param #

----------------------------------------------------------------------------------------------------------------

Conv2d-1        21 x 21   1        8       -         [-1, 96, 27, 27]      127,104

ReLU-2          -         -        -       -         [-1, 96, 27, 27]      0

Conv2d-3        7 x 7     2        2       -         [-1, 256, 13, 13]     1,204,480

ReLU-4          -         -        -       -         [-1, 256, 13, 13]     0

Conv2d-5        3 x 3     1        -       -         [-1, 384, 13, 13]     885,120

ReLU-6          -         -        -       -         [-1, 384, 13, 13]     0

Conv2d-7        3 x 3     1        -       -         [-1, 384, 13, 13]     1,327,488

ReLU-8          -         -        -       -         [-1, 384, 13, 13]     0

Conv2d-9        3 x 3     -        2       -         [-1, 256, 6, 6]       884,992

ReLU-10         -         -        -       -         [-1, 256, 6, 6]       0

Flatten-11      -         -        -       -         [-1, 9216]            0

Dropout-12      -         -        -       -         [-1, 9216]            0

Linear-13       -         -        -       -         [-1, 4096]            37,752,832

ReLU-14         -         -        -       -         [-1, 4096]            0

Dropout-15      -         -        -       -         [-1, 4096]            0

Linear-16       -         -        -       -         [-1, 4096]            16,781,312

ReLU-17         -         -        -       -         [-1, 4096]            0

Linear-18       -         -        -       -         [-1, 4]               16,388

================================================================================================================

- Pooling strategies:

Another possible tweak to AlexNet is the pooling layer. Instead of MaxPool2d, another common pooling strategy is AvgPool2d, which averages all the neurons in the receptive field.

Please copy your AlexNet to a new class named AlexNetAvgPooling, and implement the model following the architectures given below.

class AlexNetAvgPooling

================================================================================================================

Layer (type)    Kernel    Padding  Stride  Dilation  Output Shape          Param #

----------------------------------------------------------------------------------------------------------------

Conv2d-1        11 x 11   -        4       -         [-1, 96, 55, 55]      34,944

ReLU-2          -         -        -       -         [-1, 96, 55, 55]      0

AvgPool2d-3     3         -        2       -         [-1, 96, 27, 27]      0

Conv2d-4        5 x 5     2        -       -         [-1, 256, 27, 27]     614,656

ReLU-5          -         -        -       -         [-1, 256, 27, 27]     0

AvgPool2d-6     3         -        2       -         [-1, 256, 13, 13]     0

Conv2d-7        3 x 3     1        -       -         [-1, 384, 13, 13]     885,120

ReLU-8          -         -        -       -         [-1, 384, 13, 13]     0

Conv2d-9        3 x 3     1        -       -         [-1, 384, 13, 13]     1,327,488

ReLU-10         -         -        -       -         [-1, 384, 13, 13]     0

Conv2d-11       3 x 3     1        -       -         [-1, 256, 13, 13]     884,992

ReLU-12         -         -        -       -         [-1, 256, 13, 13]     0

AvgPool2d-13    3         -        2       -         [-1, 256, 6, 6]       0

Flatten-14      -         -        -       -         [-1, 9216]            0

Dropout-15      -         -        -       -         [-1, 9216]            0

Linear-16       -         -        -       -         [-1, 4096]            37,752,832

ReLU-17         -         -        -       -         [-1, 4096]            0

Dropout-18      -         -        -       -         [-1, 4096]            0

Linear-19       -         -        -       -         [-1, 4096]            16,781,312

ReLU-20         -         -        -       -         [-1, 4096]            0

Linear-21       -         -        -       -         [-1, 4]               16,388

================================================================================================================
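Both Part B variants can be sanity-checked with a little arithmetic before any training. The sketch below first traces the spatial sizes of the AlexNetLargeKernel convolutions to show that strided convolutions alone reach the same 6 x 6 map as the pooled network, and then contrasts max and average pooling on a single 3 x 3 window. It assumes a 227 x 227 input (not stated in this handout), and it reads Conv2d-9 as stride 2 with no padding, which is inferred from the 13 -> 6 output shape. All names (`out_size`, `trace`, `pool_window`, `LARGE_KERNEL_CONVS`) are illustrative.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding) for Conv2d-1, -3, -5, -7, -9 of AlexNetLargeKernel.
LARGE_KERNEL_CONVS = [(21, 8, 1), (7, 2, 2), (3, 1, 1), (3, 1, 1), (3, 2, 0)]


def trace(input_size, convs):
    """Return the output height/width after each convolution."""
    sizes = []
    size = input_size
    for kernel, stride, padding in convs:
        size = out_size(size, kernel, stride, padding)
        sizes.append(size)
    return sizes


def pool_window(window, mode):
    """Reduce one pooling window (a list of rows) to a single value."""
    values = [v for row in window for v in row]
    if mode == "max":
        return max(values)
    if mode == "avg":
        return sum(values) / len(values)
    raise ValueError(f"unknown pooling mode: {mode}")


print("large-kernel sizes:", trace(227, LARGE_KERNEL_CONVS))  # 227 is assumed

# A toy 3 x 3 window with one strong activation.
window = [
    [0.0, 1.0, 0.0],
    [2.0, 9.0, 1.0],
    [0.0, 3.0, 2.0],
]
print("max pooling:", pool_window(window, "max"))
print("avg pooling:", pool_window(window, "avg"))
```

Max pooling keeps only the strongest activation in the window, while average pooling blends it with its neighbors. Neither pooling layer has parameters, so AlexNetAvgPooling has the same parameter counts as the Part A model.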

Part C: Sentiment analysis on IMDB reviews (15 points)

Large Movie Review Dataset (IMDB) is a dataset for binary sentiment classification with 50,000 movie reviews. We have split the dataset into training (45,000) and test (5,000). The positive:negative ratio is 1:1 in both splits.

In this task, you need to develop an RNN model to "read" each review and then predict whether it is positive or negative.

In the provided starter code, we implemented an RNN pipeline (sentiment_analysis.py) and a GRUCell (rnn_modules.py) as an example.

You need to build one other variant of an RNN module, specifically as explained in this blog. You are not allowed to use the native torch.nn.LSTMCell or other built-in RNN modules in this assignment.

Instructions:

- Read the starter code (RNN Modules and Sentiment Analysis). Then run the training with the provided GRU cell. You should be able to achieve at least 85% accuracy in 50 epochs with the default hyperparameters. Attach figures (either TensorBoard screenshots or your own plots) of (1) training loss, (2) training accuracy per epoch and (3) validation accuracy per epoch in your report.

- Implement LSTMCell: a classical LSTM with input gate, forget gate, cell state and hidden state. Details are in the blog, in the section named Variants on Long Short Term Memory. Please note that the class is already provided for you, and you only need to complete the __init__ and forward functions. Please do NOT change the signatures.

- Print the number of model parameters for each module type (GRUCell and LSTMCell) using count_parameters, and include the comparison in your notebook and report.

Use the following hyperparameters for comparison: input_size=128, hidden_size=100, bias=True.

- Run experiments with your custom implementation on sentiment analysis with the IMDB dataset, and compare the results with the GRU, including both speed and performance.

Include the training loss and training/validation accuracy plots in your notebook and report.
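As a rough cross-check for the count_parameters comparison, the size of a gated cell can be estimated by hand. The sketch below assumes a formulation in which each gate applies one weight matrix to the concatenation of the previous hidden state and the current input, plus one bias vector. Under that assumption a GRU has three such transforms (update gate, reset gate, candidate state) and a classical LSTM has four (forget gate, input gate, candidate cell state, output gate). The provided GRUCell and your own LSTMCell may lay out their parameters differently (for example, PyTorch's built-in cells keep separate input and hidden biases), so treat these numbers as an estimate rather than the expected answer. `gated_cell_params` and `biases_per_gate` are hypothetical names.

```python
def gated_cell_params(input_size, hidden_size, num_gates, bias=True, biases_per_gate=1):
    """Estimate parameters of a gated RNN cell.

    Each gate maps [h_prev, x] (hidden_size + input_size values) to
    hidden_size outputs, optionally with one or more bias vectors.
    """
    weights = num_gates * hidden_size * (input_size + hidden_size)
    biases = num_gates * hidden_size * biases_per_gate if bias else 0
    return weights + biases


INPUT_SIZE, HIDDEN_SIZE = 128, 100  # hyperparameters given in the instructions

for label, per_gate in [("one bias per gate", 1), ("two biases per gate", 2)]:
    gru = gated_cell_params(INPUT_SIZE, HIDDEN_SIZE, num_gates=3, biases_per_gate=per_gate)
    lstm = gated_cell_params(INPUT_SIZE, HIDDEN_SIZE, num_gates=4, biases_per_gate=per_gate)
    print(f"{label}: GRU={gru:,} LSTM={lstm:,} ratio={lstm / gru:.3f}")
```

Under either bias convention the LSTM estimate is 4/3 of the GRU estimate, because the only difference in this model is the number of gate transforms.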

Part D: Building a Shakespeare writer (15 points)

RNNs have demonstrated great potential in modeling language, and one interesting property is that they can "learn" to generate new sentences. In this task, you will develop a character-based RNN model (meaning that the RNN processes one character at a time instead of one word at a time) to learn how to write like Shakespeare.
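To make the character-level setup concrete, here is a minimal sketch of how a piece of text can be turned into integer ids and into (input, target) pairs for next-character prediction, where the target sequence is simply the input shifted by one position. The vocabulary handling in the starter code may differ; `char_to_id`, `id_to_char`, `encode` and `decode` are names invented for this illustration, and the sample string is arbitrary.

```python
text = "ROMEO:\nBut soft, what light"  # arbitrary sample text

# Build a character vocabulary: every distinct character gets an integer id.
vocab = sorted(set(text))
char_to_id = {ch: i for i, ch in enumerate(vocab)}
id_to_char = {i: ch for ch, i in char_to_id.items()}


def encode(s):
    """Map a string to a list of character ids."""
    return [char_to_id[ch] for ch in s]


def decode(ids):
    """Map a list of character ids back to a string."""
    return "".join(id_to_char[i] for i in ids)


ids = encode(text)

# At step t the model sees ids[t] (plus its hidden state) and should predict ids[t + 1].
inputs, targets = ids[:-1], ids[1:]

print("vocabulary size:", len(vocab))
for x, y in list(zip(inputs, targets))[:5]:
    print(repr(id_to_char[x]), "->", repr(id_to_char[y]))
```

Note that punctuation, spaces and line breaks are ordinary vocabulary entries here, which is what lets a character-level model learn layout as well as spelling.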

Instructions:

- Complete the SentenceGeneration class, which is a character-level RNN. You can reuse most of the code from SentimentClassification in Part C; just note that instead of predicting positive or negative, your task is now to predict the next character given a sequence of characters (the history).

- Train the model with the GRU module on Shakespeare books. You should be able to achieve a loss value of 1.2 in 10 epochs with default hyperparameters. You probably need to use an embedding_dim of 256 and a hidden_size of 512 to get the above loss value. If you are interested, you could try your own LSTM variant, but experiments with GRU are required.

- Complete the function in sentence_generation.py to load your trained model and generate a new sentence from it.

Once a language model is trained, it is able to predict the next character after a sequence, and this process can be continued (the predicted character serves as history for predicting the next).

More specifically, your model should be able to predict the probability distribution over the vocabulary for the next character, and we have implemented a sampler, sample_next_char_id, which samples according to that probability. By repeating this process, your model is able to write arbitrarily long paragraphs.

For example, the following passage was written by a GRU trained on Shakespeare. Note that the model learns how to spell each word and write sentence-like paragraphs all by itself, even including the punctuation and line breaks.

ROMEO:Will't Marcius Coriolanus and envy of smelling!

DUKE VINCENTIO:

He seems muster in the shepherd's bloody winds;

Which any hand and my folder sea fast,

Last vantage doth be willing forth to have.

Sirraher comest that opposite too?

JULIET:

Are there incensed to my feet relation!

Down with mad appelate bargage! troubled

My brains loved and swifter than edwards:

Or, hency, thy fair bridging courseconce,

Or else had slept a traitors in mine own.

Look, Which canst thou have no thought appear.

ROMEO:

Give me them: for that I put us empty.

RIVERS:

The shadow doth not live: and he would not

From thee for his that office past confusion

Is their great expecteth on the wheek;

But not the noble fathom were an poison

Here come to make a dukedom: therefore--

But O, God grant! for Signior HERY

VI:

Soft love, that Lord Angelo: then blaze me all;

And slept not without a Calivan Us.

- Please use ROMEO and JULIET as history to begin the generation for 1000 characters each, and include the generated text in your notebook and report.
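The generation loop described above (predict a distribution, sample one character, append it to the history, repeat) can be sketched without any trained model. In the sketch below, `toy_next_char_probs` is a hand-written stand-in for a trained network, and `sample_id` is a simple sampler written for this example; it is not the starter code's sample_next_char_id, whose signature is not shown in this handout. `VOCAB` and `generate` are likewise hypothetical.

```python
import random

VOCAB = list("abc \n")  # tiny made-up vocabulary


def toy_next_char_probs(history_ids):
    """Stand-in for a trained model: a distribution over VOCAB for the next character.

    It simply favors the character that follows the last one in VOCAB order.
    """
    probs = [0.1] * len(VOCAB)
    probs[(history_ids[-1] + 1) % len(VOCAB)] += 0.5
    return probs


def sample_id(probs, rng):
    """Draw one id at random, with probability proportional to probs."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


def generate(seed_text, num_chars, rng):
    """Extend seed_text by num_chars sampled characters."""
    ids = [VOCAB.index(ch) for ch in seed_text]
    for _ in range(num_chars):
        probs = toy_next_char_probs(ids)  # distribution given the history so far
        ids.append(sample_id(probs, rng))  # the sample becomes part of the history
    return "".join(VOCAB[i] for i in ids)


rng = random.Random(0)  # fixed seed so the run is repeatable
sample = generate("ab", 20, rng)
print(repr(sample))
```

With a real RNN, the loop would usually carry the hidden state forward from step to step instead of re-reading the whole history, but the control flow is the same.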

Submission: Please submit your Jupyter notebook with the classes and functions specified.

Acknowledgement: This assignment was designed by Mingda Zhang in Spring 2020 for CS1699: Intro to Deep Learning, with minor updates in 2024.